The iPad was meant to revolutionize accessibility. What happened?

In December 2022, a few months after learning that he’d won an Iowa Arts Fellowship to attend the MFA program at the University of Iowa, David James “DJ” Savarese sat for a televised interview with a local news station. But in order to answer the anchorman’s questions, Savarese, a 30-year-old poet with autism who uses alternative communication methods, needed to devise a communication hack. He’d been coming up with them since early in his childhood.

Savarese participated remotely, from his living room couch in Iowa City. “What motivates you to write your poetry?” the news anchor asked. Watching the interview online, I could see Savarese briefly turn his head away from the camera, as if to compose a thought. He leaned in toward his laptop, a MacBook Pro that doubles as his communication device, and tapped a few keys to activate a synthesized voice. “Poetry offers me a way to answer less and converse more.”

“At a time when fear dominates the airwaves,” he continued, “poetry reawakens the senses and dislodges us from a strictly meaning-based experience, freeing ideas to mingle across boundaries of the brain and moving us beyond artificial, classificatory constructions of power.” He swayed and nodded to his sentences, which rose and fell like music.

I shifted my attention to trying to figure out Savarese’s technical setup. I didn’t see any wires or devices in the frame. Was he using a brain chip to wirelessly transmit his thoughts to a word-processing application on his computer? I wondered, “Am I looking at the future here?”

There was a reason for my particular focus: at the time, I’d been researching augmentative and alternative communication (AAC) technology for my daughter, who is five years old and also non-speaking. Underwhelmed by the available options—a handful of iPad apps that look (and work) as if they were coded in the 1990s—I’d delved into the speculative, more exciting world of brain-computer interfaces. Could a brain chip allow my daughter to verbally express herself with the same minimal effort it takes me to open my mouth and speak? How might she sound, telling me about her day at school? Singing “Happy Birthday” or saying “Mama”? I wanted the future to be here now.

But watching Savarese revealed magical thinking on my part. Behind the curtain, the mechanics of his participation were extremely low tech—kind of janky, in fact.

The process didn’t fit the mold of what I thought technology should do: take the work out of a manual operation and make it faster and easier. The network had invited Savarese onto the program and a producer had emailed the questions to him in advance. To prepare, Savarese had spent about 15 minutes typing his answers into a Microsoft Word file. When it came time for the live interview, the anchorman recited the questions, to which Savarese responded on his MacBook by using Word’s “Read Aloud” function to speak his pre-composed answers. The types of readily available technology that could power an assistive communication device—AI, natural-language processing, word prediction, voice banking, eye-gaze tracking—played no role here. And yet, without any of the features I’d expected to see, Savarese had the tools he needed to express the fullness of his thoughts.

Six years into the wild, emotionally draining ride of raising a disabled child in an ableist world, I’ve learned to keep my mind open to every possibility. While my daughter is intellectually and physically delayed—she was born missing 130 genes and 10 million base pairs of DNA—she is a healthy, joyful, and opinionated child. Since she came into our lives, I have been amazed over and over that simple things that would make her life easier—curb cuts, thicker crayons, kindness—aren’t more prevalent in our advanced society. But we do have a range of more sophisticated and inventive things I didn’t think would ever exist—calming VR environments built into Frozen-themed MRI scanners, for example. So I hope it makes sense that initially, while watching Savarese speak, I assumed he was using an implanted brain chip to automatically transmit his thoughts into a Word doc. That just seemed to me more plausible than revolutionary. When I realized there was no brain chip—just a few emails and Microsoft Word—a familiar sense of disappointment landed me back on Earth with a thud.

“Everything moves slowly because it has to be compatible with the past, which means if the past was kind of clunky, part of the present is kind of clunky too,” Mark Surabian, an AAC consultant, told me.

Surabian, 61, has trained administrators and speech therapists at hundreds of schools for 35 years. He has taught graduate students at nearly every special-education teacher training program in New York City, including those at Columbia Teachers College and New York University. He specializes in customizing off-the-shelf AAC apps to meet the specific needs of his own clients and has made a career of being the indispensable “tech guy” who partners with speech and language pathologists, teachers, and neuropsychologists to find the right hardware and software for their clients.

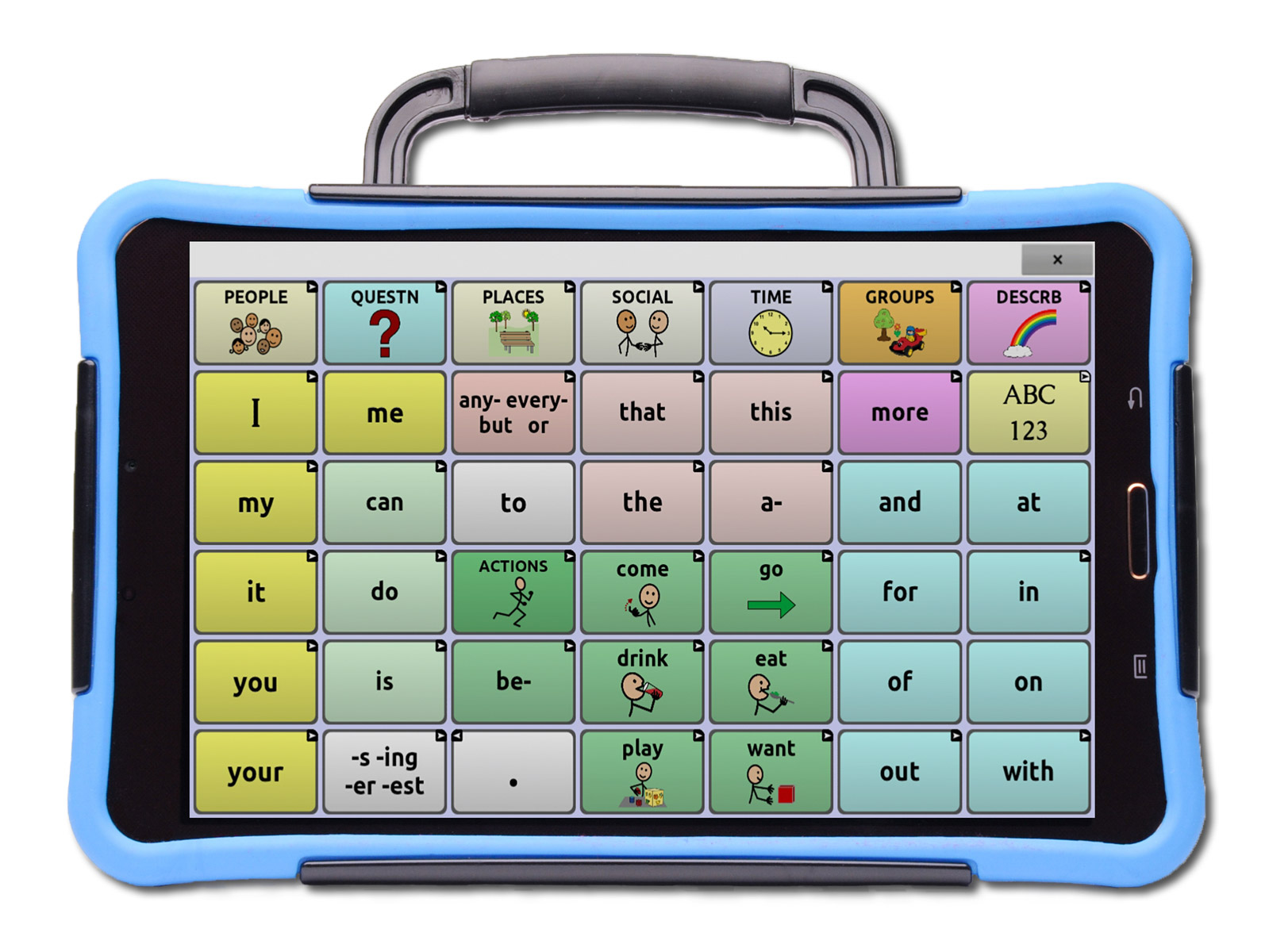

The default TouchChat display of PRC-Saltillo’s communication device, for example, consists of 12 rows of eight buttons displaying a mix of letters, object icons (“apple”), category icons (“food”), and navigation elements (back arrows)—many of them in garish neon colors. Part of what I find infuriating about the interface is how it treats every button similarly—they’re all the same size, 200 by 200 pixels, and there’s no obvious logic to button placement, text size, or capitalization. Some words are oddly abbreviated (“DESCRB”) while others (“thank you”) are scaled down to fit the width of the box. The graphic for “cool” is a smiling stick figure giving a thumbs up; aside from the fact it’s redundant with “good” (a hand-only thumbs up), “yes,” and “like” (both smiley faces), what if the user means cool in temperature?

Established principles of information hierarchy and interface design for AAC devices aren’t standard—it’s up to Surabian to define the number and size of buttons on each screen, as well as icon size, type size, and whether a button’s position should change or remain fixed.

I’d called Surabian in hopes of being wowed. When he and I met up at a café in lower Manhattan, I got excited by the rolling briefcase by his side, thinking he might show me the coolest stuff happening in AAC. But I was again underwhelmed.

Because the reality is this: the last major advance in AAC technology happened 13 years ago, an eternity in technology time. On April 3, 2010, Apple released the iPad, which Steve Jobs had unveiled that January. What for most people was basically a more convenient form factor was something far more consequential for non-speakers: a life-changing revolution in access to an attractive, portable, and powerful communication device for just a few hundred dollars. Like smartphones, iPads had built-in touch screens, but with the key advantage of more space to display dozens of icon-based buttons on a single screen. And for the first time, AAC users could use the same device they used for speaking to do other things, like text, FaceTime, browse the web, watch movies, record audio, and share photos.

“School districts and parents were buying an iPad, bringing it to us, and saying ‘Make this work,’” wrote Heidi LoStracco and Renee Shevchenko, two Philadelphia-based speech and language pathologists who worked exclusively with non-speaking children. “It got to the point where someone was asking us for iPad applications for AAC every day. We would tell them, ‘There’s not really an effective AAC app out there yet, but when there is, we’ll be the first to tell you about it.’”

A piece of hardware, however impressively designed and engineered, is only as valuable as what a person can do with it. After the iPad’s release, the flood of new, easy-to-use AAC apps that LoStracco, Shevchenko, and their clients wanted never came.

Today, there are about a half-dozen apps, each retailing for $200 to $300, that rely on 30-year-old conventions asking users to select from menus of crudely drawn icons to produce text and synthesized speech. Beyond the high price point, most AAC apps require customization by a trained specialist to be useful. This could be the reason access remains a problem; LoStracco and Shevchenko claim that only 10% of non-speaking people in the US are using the technology. (AAC Counts, a project of CommunicationFIRST, a national advocacy organization for people with speech disabilities, recently highlighted the need for better data about AAC users.)

There aren’t many other options available, though the possibilities do depend on the abilities of the user. Literate non-speakers with full motor control of their arms, hands, and fingers, for example, can use readily available text-to-speech software on a smartphone, tablet, or desktop or laptop computer. Those whose fine motor control is limited can also use these applications with the assistance of an eye-controlled laser pointer, a physical pointer attached to their head, or another person to help them operate a touch screen, mouse, or keyboard. The options dwindle for pre-literate and cognitively impaired users who communicate with picture-based vocabularies. For my daughter, I was briefly intrigued by a “mid-tech” option—the Logan ProxTalker, a 13-inch console with a built-in speaker and a kit of RFID-enabled sound tags. One of five stations on the console recognizes the tags, each pre-programmed to speak its unique icon. But then I saw its price—$3,000 for 140 tags. (For context, the National Institutes of Health estimates that the average five-year-old can recognize over 10,000 words.)

I am left to dream of the brain-computer interfaces heralded as the next frontier—implants that would send signals from the central nervous system straight to a computer, without any voice or muscular activation. But the concept of BCIs is riddled with significant and legitimate ethical issues. Partly for that reason, BCIs are too far off to take seriously right now. In the meantime, in the mediocre present, it’s hard to think that non-disabled consumers would be expected to accept the same slow pace of incremental improvement for as essential a human function as speech.

In the late 2000s, AAC companies braced themselves for a massive shift. When I talked to Sarah Wilds, chief operating officer for PRC-Saltillo, she described the mood at the company’s annual meeting in 2008, the year she began working there.

“We’re all speech pathologists [together] in this room,” Wilds told me, “and somebody said, ‘There’s this thing called the iPod Touch.’ And everyone said, ‘We have computers. Why would anyone want a small screen?’”

“The next year, people said, ‘Oh my gosh, they’re doing it—and what if those screens get bigger?’ And the year after that, the iPad was here. We were all horrified that anyone could get to the store, pick one out, and put an app on it.”

Horrified, that is, because they assumed the new technology would radically undercut the price of the kinds of tools they were making and selling. AAC developers, in stark contrast to a company like Apple, weren’t set up to sell their software directly to the masses in 2010. The small handful of AAC players in the US—PRC-Saltillo and Dynavox were the largest—had been running modest operations making and selling programs pre-loaded onto their proprietary hardware since the 1980s (though PRC introduced its first device in 1969). Their founders, former linguists and speech therapists, were optimistic about the technology’s potential; they were also realists who recognized that assistive communication would never be a booming business. The assistive communication market was, and still is, relatively small. According to the National Institute on Deafness and Other Communication Disorders, between 5% and 10% of people living in the US have a speech impairment, and only a small fraction of them require assistive devices.

When I asked Wilds how many people use PRC-Saltillo’s products, she demurred, noting that the company is private and doesn’t publicly disclose its balance sheets. About the landscape in general, she offered this: customer demand for apps developed by AAC companies is generally 10 times greater than demand for their hardware products. Oh, and about that “special dedicated communication hardware device”—it’s probably an iPad.

In fall of 2022, when I set out to find a device for my daughter, her speech therapist referred me to AbleNet, a third-party assistive technology provider. (Public school districts in the US can also procure AAC devices on behalf of students who need them, but at the time, my daughter wasn’t eligible because the provision wasn’t listed in her individualized education program.) An AbleNet representative sent me a quote for a Quick Talker Freestyle, an “iPad-based speech device” for which they would bill our health insurance $4,190. The “family contribution,” the representative reassured me, would be only $2,245.

For that price, surely the Quick Talker Freestyle would offer something more advanced than an app I could download onto the refurbished iPad I’d already bought from Apple for $279? (A new ninth-generation iPad retails for $329.) When I pressed for details, I was handed off to a benefits assistance supervisor, who suggested I sign up for the company’s payment plan. (I did not.) I would later learn that in order to comply with Medicaid and health insurance requirements, third-party AAC technology providers usually strip Apple’s native applications off the iPads they distribute—making them less useful, at 10 or 20 times the price, than the same piece of hardware that I (or anyone else) can buy off the shelf.

When I reached out to AbleNet for comment, Joe Volp, vice president of marketing and customer relations, detailed customer-service benefits built into the pricing, including a device trial period, a five-year unlimited warranty, and quick turnaround for repairs. Those are nice options, but they don’t come close to justifying the markup.

I told Wilds about my experience; she sounded genuinely sympathetic. “I think it’s very difficult to be an app-only company in the world of assistive technology,” she explained. AAC companies, she suggested, need to sell expensive hardware to fund the development of their apps.

Most AAC companies were started by experts in technology development, not distribution. For at least the last three decades, they’ve developed their products as FDA-approved medical devices to increase the chances that Medicaid, private health insurers, and school districts will pay for them. To compensate for the small market size, developers backed into a convoluted business model that required a physician or licensed speech pathologist to formally “prescribe” the device, strategically (and astronomically) priced to subsidize the cost of its development.

Before 2010, companies building their own AAC hardware and software from scratch could get away with keeping their pricing strategy opaque. But when Apple mass-produced a better version of the hardware they’d been developing in house, the smoke and mirrors disappeared, exposing a system that looks a lot like price gouging.

But it would be too easy to call AAC companies the villain; considering they operate in the same market-driven system as the most popular consumer technology products in the world, it’s a minor miracle they even exist. And the problem of jacking up products’ prices to cover the cost of their development is hardly unique. All too often—in the absence of a public or private entity that proactively funds highly specialized technology with the potential to change, even save, a small number of lives—some form of price gouging is the norm.

Perhaps it’s my expectations that are flawed. It may be naïve to expect affordable, groundbreaking AAC technology to spring from the same conditions that support billions of people selling makeup and posting selfies. Maybe there needs to be an altogether separate arena, complete with its own incentives, for developing technology products aimed at small populations of people who could truly benefit from them.

In March, Savarese agreed to meet me online for a live conversation. (From our email exchanges, I sensed he was most comfortable communicating asynchronously, in writing.) I sent him a list of questions in advance. I wanted his thoughts on some not-easy topics, and I didn’t want the inconvenience of the live medium to limit what he had to say.

A few minutes before our scheduled meeting, Savarese emailed me an eight-page Word document, into which he’d copied my questions and added his answers. I recognized my own questions as well as a few of his responses—a mix of passages quoted from his previous writings and some new material he’d typed in.

One question about AAC devices that Savarese added to my list: “Have they improved?” To which he replied, “Sure: lighter, less expensive, consolidated all my word-based communication and work needs into one smallish device (laptop or iPad). The subtlety of voices is improving although I’ve chosen to use the same voice throughout the years and cannot come close to replicating what a poem I wrote should sound like without coaching and recording a trusted, speaking poet reading them aloud.”

Given the existence of the Word document—which was essentially a transcript of an interview I was about to conduct—what would be the purpose of our live conversation? I suggested to Savarese that I might read the document aloud—the words I’d written myself, at least. It felt invasive to use my voice to read Savarese’s responses; he gets understandably frustrated by the tendency of others to speak for him.

Instead, I asked him to paste his pre-written answers into the chat window or to use the Read Aloud function to speak them. Over the course of the interview, I paraphrased his responses and asked follow-up questions to ensure I was interpreting him correctly. I followed his lead in alternating between modes of communication.

In this way, Savarese discussed his personal history of AAC communication. As a six-year-old, he’d learned sign language alongside his non-disabled parents. In elementary school, they equipped him with a $17 label maker from Staples to teach him sight words and help him learn to read and type. In fourth grade, Savarese started typing out his thoughts on a personal computer called the Gemini, a souped-up $12,000 version of a late-’90s Apple laptop.

The Gemini required plug-in power and a teacher to help him move it. In keeping with the Medicaid requirements (which force manufacturers to remove features that might tempt parents to use a device themselves), it had no word processor or internet access. Savarese adapted by continuing to use his label maker to fill out tests and write poems and stories. To write faster, he assembled pre-printed paper strips of commonly used words and phrases, pulled from a word bank made by his mother.

“It was at the time a big deal for me to have what seemed like my very own computer,” Savarese told me. “So that status sort of outweighed the inconvenience.”

Savarese looks back on his early AAC years with surprising wistfulness: “Instead of insisting I join their speaking world,” he told me, “my parents learned these new languages with me … These technologies were more multisensory, more communal, and in a sense more democratic; in pictures, sign language, and tangible sight words, my parents, teachers, friends, and I were all learners, all teachers.” By the time he was in 10th grade, text-to-speech software was finally ubiquitous enough to come pre-installed on a laptop—a single lightweight device that gave Savarese the ability to communicate both silently and out loud.

“Imagining that technology alone can liberate us is a bit shortsighted and, in some ways, disabling,” he typed to me. But he does have a wish list of improvements, starting with a louder voice built into laptops, tablets, and smartphones so others can hear him better. He’d like slide presentation software with customizable script-reading options, so users can sync their voiceover with the changing slides. He also thinks that word prediction has a long way to go to become truly useful—and less prescriptive.

Yet Savarese’s attitude toward AAC technology remains realistic, even generous. “I live in a speech-centric society/world, so I have to have a speech-based way to communicate to the majority of people,” he told me. “I am able to make a living as a writer, filmmaker, presenter, collaborator, and activist (inter)nationally. My AAC devices have offered me the public voice I needed to exist as an essential member of these communities.”

The payoff for Savarese, and for his audiences, is big: “I think it’s maybe gotten better for me as I’ve gotten older. People are invested in hearing what I have to say.”

Julie Kim is a writer based in New York City.