Quantum computing is taking on its biggest challenge: noise

In the past 20 years, hundreds of companies, including giants like Google, Microsoft, and IBM, have staked a claim in the rush to establish quantum computing. Investors have put in well over $5 billion so far. All this effort has just one purpose: creating the world’s next big thing.

Quantum computers use the counterintuitive rules that govern matter at the atomic and subatomic level to process information in ways that are impossible with conventional, or “classical,” computers. Experts suspect that this technology will be able to make an impact in fields as disparate as drug discovery, cryptography, finance, and supply-chain logistics. The promise is certainly there, but so is the hype. In 2022, for instance, Haim Israel, managing director of research at Bank of America, declared that quantum computing will be “bigger than fire and bigger than all the revolutions that humanity has seen.” Even among scientists, a slew of claims and vicious counterclaims have made it a hard field to assess.

Ultimately, though, assessing our progress in building useful quantum computers comes down to one central factor: whether we can handle the noise. The delicate nature of quantum systems makes them extremely vulnerable to the slightest disturbance, whether that’s a stray photon created by heat, a random signal from the surrounding electronics, or a physical vibration. This noise wreaks havoc, generating errors or even stopping a quantum computation in its tracks. It doesn’t matter how big your processor is, or what the killer applications might turn out to be: unless noise can be tamed, a quantum computer will never surpass what a classical computer can do.

For many years, researchers thought they might just have to make do with noisy circuitry, at least in the near term—and many hunted for applications that might do something useful with that limited capacity. The hunt hasn’t gone particularly well, but that may not matter now. In the last couple of years, theoretical and experimental breakthroughs have enabled researchers to declare that the problem of noise might finally be on the ropes. A combination of hardware and software strategies is showing promise for suppressing, mitigating, and cleaning up quantum errors. It’s not an especially elegant approach, but it does look as if it could work—and sooner than anyone expected.

“I’m seeing much more evidence being presented in defense of optimism,” says Earl Campbell, vice president of quantum science at Riverlane, a quantum computing company based in Cambridge, UK.

Even the hard-line skeptics are being won over. University of Helsinki professor Sabrina Maniscalco, for example, researches the impact of noise on computations. A decade ago, she says, she was writing quantum computing off. “I thought there were really fundamental issues. I had no certainty that there would be a way out,” she says. Now, though, she is working on using quantum systems to design improved versions of light-activated cancer drugs that are effective at lower concentrations and can be activated by a less harmful form of light. She thinks the project is just two and a half years from success. For Maniscalco, the era of “quantum utility”—the point at which, for certain tasks, it makes sense to use a quantum rather than a classical processor—is almost upon us. “I’m actually quite confident about the fact that we will be entering the quantum utility era very soon,” she says.

Putting qubits in the cloud

This breakthrough moment comes after more than a decade of creeping disappointment. Throughout the late 2000s and the early 2010s, researchers building and running real-world quantum computers found them to be far more problematic than the theorists had hoped.

To some people, these problems seemed insurmountable. But others, like Jay Gambetta, were unfazed.

A quiet-spoken Australian, Gambetta has a PhD in physics from Griffith University, on Australia’s Gold Coast. He chose to go there in part because it allowed him to feed his surfing addiction. But in July 2004, he wrenched himself away and skipped off to the Northern Hemisphere to do research at Yale University on the quantum properties of light. Three years later (by which time he was an ex-surfer thanks to the chilly waters around New Haven), Gambetta moved even further north, to the University of Waterloo in Ontario, Canada. Then he learned that IBM wanted to get a little more hands-on with quantum computing. In 2011, Gambetta became one of the company’s new hires.

IBM’s quantum engineers had been busy building quantum versions of the classical computer’s binary digit, or bit. In classical computers, the bit is an electronic switch, with two states to represent 0 and 1. In quantum computers, things are less black and white. If isolated from noise, a quantum bit, or “qubit,” can exist in a probabilistic combination of those two possible states, a bit like a coin in mid-toss. This property of qubits, along with their potential to be “entangled” with other qubits, is the key to the revolutionary possibilities of quantum computing.
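
For readers who want to see the coin analogy in numbers, here is a minimal Python sketch. It assumes an idealized, noise-free single qubit written as two amplitudes, a and b, for the states 0 and 1. Measuring it returns 0 with probability |a|^2 and 1 with probability |b|^2 (the Born rule), so an equal superposition lands on each outcome about half the time. The function name `measure` is purely illustrative, and the toy leaves out entanglement and everything else that makes real qubits hard to build.

```python
import math
import random

# Toy model of one idealized qubit, a|0> + b|1>, stored as two amplitudes.
# Illustrative only: real quantum hardware is not simulated this way.
def measure(a: complex, b: complex, rng: random.Random) -> int:
    """Collapse the qubit to 0 or 1 with Born-rule probabilities |a|^2 and |b|^2."""
    p0 = abs(a) ** 2
    p1 = abs(b) ** 2
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must be normalized")
    return 0 if rng.random() < p0 else 1

# Equal superposition, the "coin in mid-toss": (|0> + |1>) / sqrt(2)
a = b = 1 / math.sqrt(2)
rng = random.Random(0)  # fixed seed so the run is repeatable
counts = [0, 0]
for _ in range(10_000):
    counts[measure(a, b, rng)] += 1
print(counts)
```

The printed counts should come out close to an even split. Each individual measurement gives a definite 0 or 1; the superposition shows up only in the statistics over many runs.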

A year after joining the company, Gambetta spotted a problem with IBM’s qubits: everyone could see that they were getting pretty good. Whenever he met up with his fellow physicists at conferences, they would ask him to test out their latest ideas on IBM’s qubits. Within a couple of years, Gambetta had begun to balk at the volume of requests. “I started thinking that this was insane—why should we just run experiments for physicists?” he recalls.

It occurred to him that his life might be easier if he could find a way for physicists to operate IBM’s qubits for themselves—maybe via cloud computing. He mentioned it to his boss, and then he found himself with five minutes to pitch the idea to IBM’s executives at a gathering in late 2014. The only question they asked was whether Gambetta was sure he could pull it off. “I said yes,” he says. “I thought, how hard can it be?”

Very hard, it turned out, because IBM’s executives told Gambetta he had to get it done quickly. “I wanted to spend two years doing it,” he says. They gave him a year.

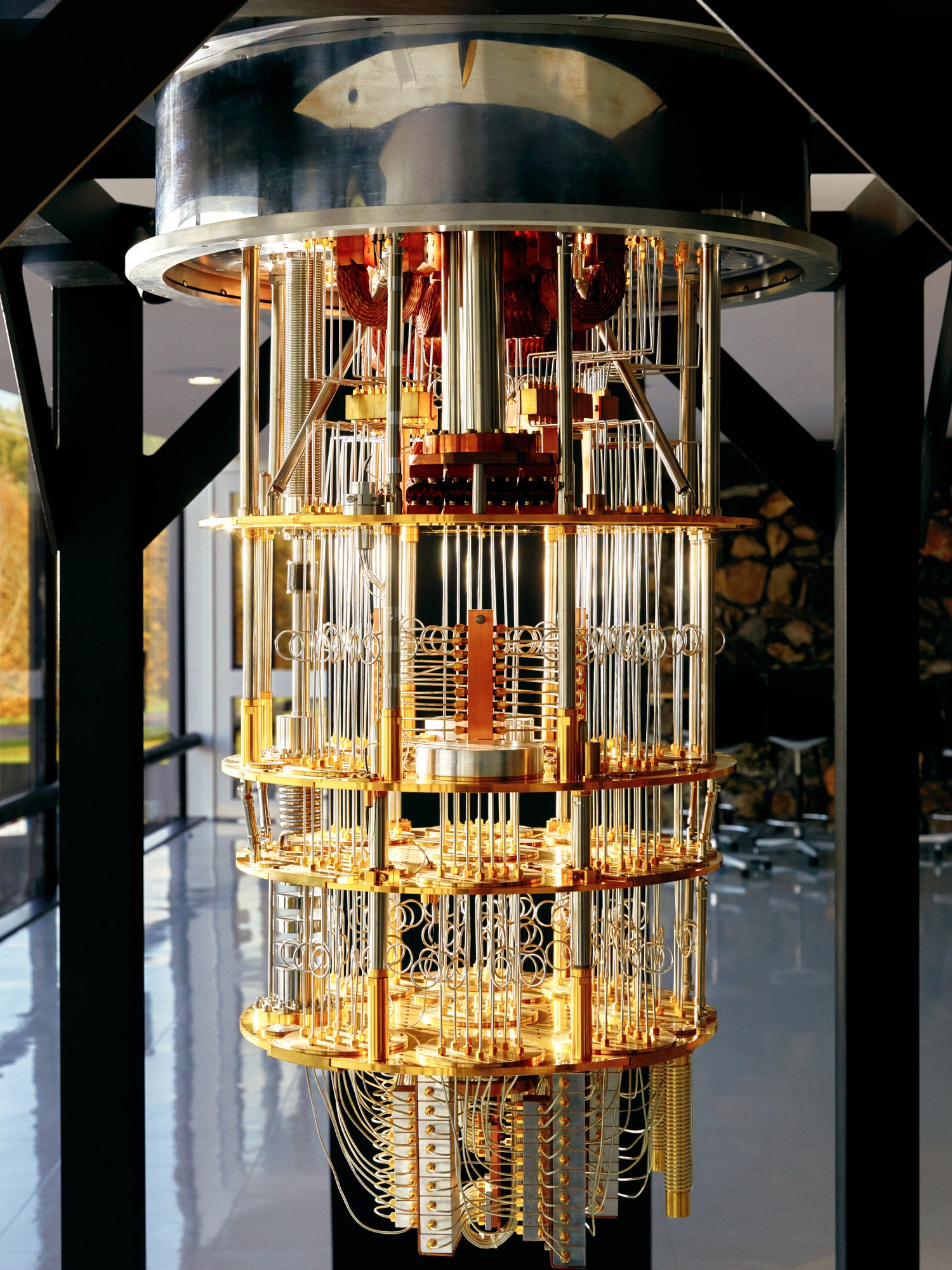

It was a daunting challenge: he barely knew what the cloud was back then. Fortunately, some of his colleagues did, and they were able to upgrade the team’s remote access protocols—useful for tweaking the machine in the evening or on the weekend—to create a suite of interfaces that could be accessed from anywhere in the world. The world’s first cloud-access quantum computer, built using five qubits, went live at midnight on May the 4th, 2016. The date, Star Wars Day, was chosen by nerds, for nerds. “I don’t think anyone in upper management was aware of that,” Gambetta says, laughing.

Not that upper management’s reaction to the launch date was uppermost in his mind. Of far more concern, he says, was whether a system reflecting years of behind-the-scenes development work would survive being hooked up to the real world. “We watched the first jobs come in. We could see them pinging on the quantum computer,” he says. “When it didn’t break, we started to relax.”

Cloud-based quantum computing was an instant hit. Seven thousand people signed up in the first week, and there were 22,000 registered users by the end of the month. Their ventures made it clear, however, that quantum computing had a big problem.

The field’s eventual aim is to have hundreds of thousands, if not millions, of qubits working together. But when it became possible for researchers to test out quantum computers with just a few qubits working together, many theory-based assumptions about how much noise they would generate turned out to be seriously off.

Some noise was always in the cards. Because qubits operate at temperatures above absolute zero, where thermal radiation is always present, everyone expected some random knocks to them. But there were nonrandom knocks too. Changing temperatures in the control electronics created noise. Applying pulses of energy to put the qubits in the right states created noise. And worst of all, it turned out that sending a control signal to one qubit created noise in other, nearby qubits. “You’re manipulating a qubit and another one over there feels it,” says Michael Biercuk, director of the Quantum Control Laboratory at the University of Sydney in Australia.

By the time quantum algorithms were running on a dozen or so qubits, the performance was consistently shocking. In a 2022 assessment, Biercuk and others calculated the probability that an algorithm would run successfully before noise destroyed the information held in the qubits and forced the computation off track. If an algorithm with a known correct answer was run 30,000 times, say, the correct answer might be returned only three times.

Though disappointing, it was also educational. “People learned a lot about these machines by actually using them,” Biercuk says. “We found a lot of stuff that more or less nobody knew about—or they knew and had no idea what to do about it.”

Fixing the errors

Once they had recovered from this noisy slap, researchers began to rally. And they have now come up with a set of solutions that can work together to bring the noise under control.

Broadly speaking, solutions can be classed into three categories. The base layer is error suppression. This works through classical software and machine-learning algorithms, which continually analyze the behavior of the circuits and the qubits and then reconfigure the circuit design and the way instructions are given so that the information held in the qubits is better protected. This is one of the things that Biercuk’s company, Q-CTRL, works on; suppression, the company says, can make quantum algorithms 1,000 times more likely to produce a correct answer.

The next layer, error mitigation, uses the fact that not all errors cause a computation to fail; many of them will just steer the computation off track. By looking at the errors that noise creates in a particular system running a particular algorithm, researchers can apply a kind of “anti-noise” to the quantum circuit to reduce the chances of errors during the computation and in the output. This technique, something akin to the operation of noise-canceling headphones, is not a perfect fix. It relies, for instance, on running the algorithm multiple times, which increases the cost of operation, and it works from only an estimate of the noise. Nonetheless, it does a decent job of reducing errors in the final output, Gambetta says.
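
The article doesn't say which mitigation method is meant, so as an illustration of the general trade (more runs plus a noise estimate in exchange for a cleaner answer), here is a minimal sketch of zero-noise extrapolation, a common mitigation technique swapped in for this example. The idea: run the same circuit at deliberately amplified noise levels, then extrapolate the measured values back to zero noise. The "device" here is a hypothetical toy function whose signal decays exponentially with noise, and the constants `IDEAL_VALUE` and `DECAY_RATE` are invented for illustration; real hardware is far less tidy.

```python
import math

IDEAL_VALUE = 1.0  # hypothetical noise-free result (unknown on a real device)
DECAY_RATE = 0.15  # hypothetical signal loss per unit of noise

def noisy_expectation(noise_scale: float) -> float:
    """Toy stand-in for running a circuit: the signal fades as noise is amplified."""
    return IDEAL_VALUE * math.exp(-DECAY_RATE * noise_scale)

def richardson_extrapolate(scales: list[float], values: list[float]) -> float:
    """Fit the polynomial through the (scale, value) points and evaluate it at
    scale 0, using Lagrange interpolation weights."""
    estimate = 0.0
    for i, (xi, yi) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, xj in enumerate(scales):
            if j != i:
                weight *= (0.0 - xj) / (xi - xj)
        estimate += weight * yi
    return estimate

scales = [1.0, 2.0, 3.0]  # 1 = native noise; 2 and 3 = deliberately amplified
values = [noisy_expectation(s) for s in scales]
mitigated = richardson_extrapolate(scales, values)
print(f"raw: {values[0]:.4f}  mitigated: {mitigated:.4f}  ideal: {IDEAL_VALUE}")
```

Note the cost the article mentions: one answer now takes runs at three noise levels instead of one, and the result is only as good as the assumption that the noise can be smoothly extrapolated.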

Helsinki-based Algorithmiq, where Maniscalco is CEO, has its own way of cleaning up noise after the computation is done. “It basically eliminates the noise in post-processing, like cleaning up the mess from the quantum computer,” Maniscalco says. So far, it seems to work at reasonably large scales.

On top of all that, there has been a growing roster of achievements in “quantum error correction,” or QEC. Instead of holding a qubit’s worth of information in one qubit, QEC encodes it in the quantum states of a set of qubits. A noise-induced error in any one of those is not as catastrophic as it would be if the information were held by a single qubit: by monitoring each of the additional qubits, it’s possible to detect any change and correct it before the information becomes unusable.
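
Real QEC codes, including the surface code discussed below, protect fragile quantum states and must detect errors by monitoring the extra qubits without reading out the encoded information directly. As a much simpler classical analogue of the redundancy idea, here is a sketch of a three-bit repetition code: copy one bit into three, let noise flip each copy independently, then repair the damage by majority vote. It is not a quantum code, and the function names are illustrative.

```python
import random

def encode(bit: int) -> list[int]:
    """Spread one logical bit across three physical bits."""
    return [bit, bit, bit]

def apply_noise(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]

def decode(bits: list[int]) -> int:
    """Majority vote: a single flipped bit is outvoted by the two intact copies."""
    return 1 if sum(bits) >= 2 else 0

def logical_error_rate(p: float, trials: int, seed: int = 1) -> float:
    """Fraction of trials in which the decoded bit no longer matches the original."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        if decode(apply_noise(encode(0), p, rng)) != 0:
            failures += 1
    return failures / trials

p = 0.05
print(f"unprotected: {p:.4f}  encoded: {logical_error_rate(p, 100_000):.4f}")
```

Decoding fails only when two or more of the three bits flip, which happens with probability 3p^2 - 2p^3, about 0.7% for p = 0.05, so the simulated rate should land near that rather than the unprotected 5%. The catch, as with real QEC, is overhead: three physical bits per useful one, and the protection only helps if p is already small.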

Implementing QEC has long been considered one of the essential steps on the path to large-scale, noise-tolerant quantum computing—to machines that can achieve all the promise of the technology, such as the ability to crack popular encryption schemes. The trouble is, QEC uses a lot of overhead. The gold-standard error correction architecture, known as a surface code, requires at least 13 physical qubits to protect a single useful “logical” qubit. As you connect logical qubits together, that number balloons: a useful processor might require 1,000 physical qubits for every logical qubit.

There are now multiple reasons to be optimistic even about this, however. In July 2022, for instance, Google’s researchers published a demonstration of a surface code in action where performance got better—not worse—when more qubits were connected together.

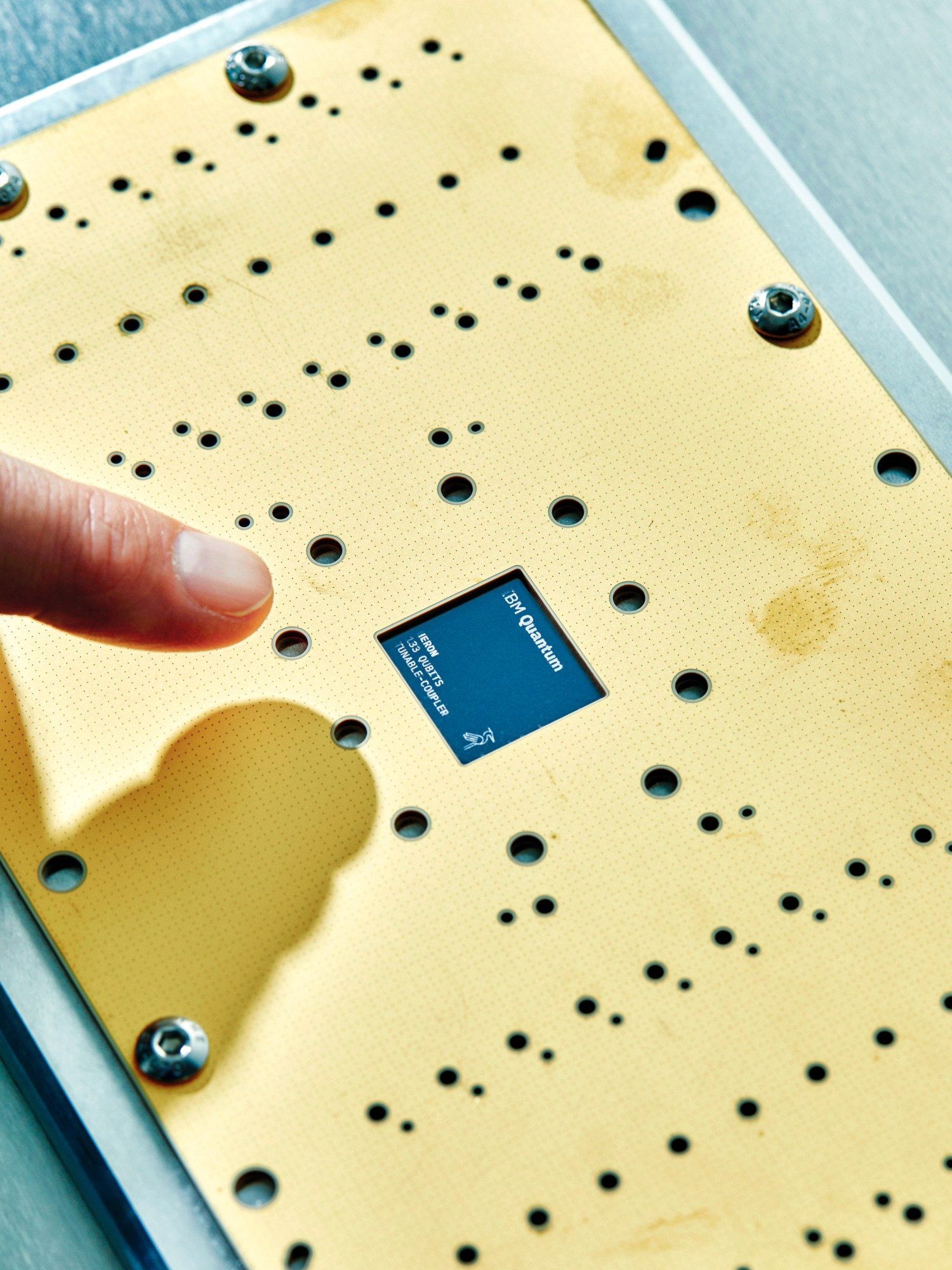

There have also been promising demonstrations of theoretical alternatives to surface codes. In August 2023, an IBM team that included Gambetta showed an error correction technique that could control the errors in a 12-qubit memory circuit using an extra 276 qubits, a big improvement over the thousands of extra qubits required by surface codes.

In September, two other teams demonstrated similar improvements with a fault-tolerant circuit called a CCZ gate, using superconducting circuitry and ion-trap processors.

That so many noise-handling techniques are flourishing is a huge deal—especially at a time when the notion that we might get something useful out of small-scale, noisy processors has turned out to be a bust.

Actual error correction is not yet happening on commercially available quantum processors (and is not generally implementable as a real-time process during computations). But Biercuk sees quantum computing as finally hitting its stride. “I think we’re well on the way now,” he says. “I don’t see any fundamental issues at all.”

And these innovations are happening alongside general improvements in hardware performance—meaning that there are ever fewer baseline errors in the functioning qubits—and an increase in the number of qubits on each processor, making bigger and more useful calculations possible. Biercuk says he is starting to see places where he might soon choose a quantum computer over the best-performing classical machines. Neither a classical nor a quantum computer can fully solve large-scale tasks like finding the optimal routes for a nationwide fleet of delivery trucks. But, Biercuk points out, accessing and running the best classical supercomputers costs a great deal of money—potentially more than accessing and running a quantum computer that might even give a slightly better solution.

“Look at what high-performance computing centers are doing on a daily basis,” says Kuan Tan, CTO and cofounder of the Finland-based quantum computer provider IQM. “They’re running power-hungry scientific calculations that are reachable [by] quantum computers that will consume much less power.” A quantum computer doesn’t have to be a better computer than any other kind of machine to attract paying customers, Tan says. It just has to be comparable in performance and cheaper to run. He expects we’ll achieve that quantum energy advantage in the next three to five years.

Finding utility

A debate has long raged about what target quantum computing researchers should be aiming for in their bid to compete with classical computers. Quantum supremacy, the goal Google has pursued—a demonstration that a quantum computer can solve a problem no classical computer can crack in a reasonable amount of time? Or quantum advantage—superior performance when it comes to a useful problem—as IBM has preferred? Or quantum utility, IBM’s newest buzzword? The semantics reflect differing views of what near-term objectives are important.

In June, IBM announced that it would begin retiring its entry-level processors from the cloud, so that its 127-qubit Eagle processor would be the smallest one that the company would make available. The move is aimed at pushing researchers to prioritize truly useful tasks. Eagle is a “utility-scale” processor, IBM says—when correctly handled, it can “provide useful results to problems that challenge the best scalable classical methods.”

It’s a controversial claim—many doubt that Eagle really is capable of outperforming suitably prepared classical machines. But classical computers are already struggling to keep up with it, and IBM has even larger systems: the 433-qubit Osprey processor, which is also cloud-accessible, and the 1,121-qubit Condor processor, which debuted in December. (Gambetta has a simple rationale for the way he names IBM’s quantum processors: “I like birds.”) The company has a new modular design, called Heron, and Flamingo is slated to appear in 2025—with fully quantum connections between chips that allow the quantum information to flow between different processors unhindered, enabling truly large-scale quantum computation. That will make 2025 the first year that quantum computing will be provably scalable, Gambetta says: “I’m aiming for 2025 to be an important year for demonstrating key technologies that allow us to scale to hundreds of thousands of qubits.”

IQM’s Tan is astonished at the pace of development. “It’s mind-boggling how fast this field is progressing,” he says. “When I was working in this field 10 years ago, I would never have expected to have a 10-qubit chip at this point. Now we’re talking about hundreds already, and potentially thousands in the coming years.”

It’s not just IBM. Campbell has been impressed by Google’s quiet but emphatic progress, for instance. “They operate differently, but they have hit the milestones on their public road map,” he says. “They seem to be doing what they say they will do.” Other household-name companies are embracing quantum computing too. “We’re seeing Intel using their top-line machines, the ones that they use for making chips, to make quantum devices,” Tan says. Intel is following a technology path very different from IBM’s: creating qubits in silicon devices that the company knows how to manufacture at scale, with minimal noise-inducing defects.

As quantum computing hits its stride and quantum computers begin to process real-world data, technological and geographical diversity will be important to avoid geopolitical issues and problems with data-sharing regulations.

There are restrictions, for instance, aimed at maintaining national security—which will perhaps limit the market opportunities of multinational giants such as IBM and Google. At the beginning of 2022, France’s defense minister declared quantum technologies to be of “strategic interest” while announcing a new national program of research. In July 2023, Deutsche Telekom announced a new partnership with IQM for cloud-based access to quantum computing, calling it a way for DT customers to access a “truly sovereign quantum environment, built and managed from within Europe.”

This is not just nationalistic bluster: sovereignty matters. DT is leading the European Commission’s development of a quantum-based, EU-wide high-security communications infrastructure; as the era approaches when large-scale quantum computers pose a serious threat to standard encryption protocols, governments and commercial organizations will want to be able to test “post-quantum” encryption algorithms—ones that withstand attack by any quantum computer, irrespective of its size—within their own borders.

Not that this is a problem yet. Few people think that a security-destroying large-scale quantum processor is just around the corner. But there is certainly a growing belief in the field’s potential to be transformative—and useful—in other ways within just a few years. And these days, that belief is based on real-world achievements. “At Algorithmiq, we believe in a future where quantum utility will happen soon, but I can trace this optimism back to patents and publications,” Maniscalco says.

The only downside for her is that not everybody has come around the way she has. Quantum computing is here now, she insists—but the old objections die hard, and many people refuse to see it.

“There is still a lot of misunderstanding: I get very upset when I see or hear certain conversations,” she says. “Sometimes I wish I had a magic wand that could open people’s eyes.”

Michael Brooks is a freelance science journalist based in the UK.