Inside the hunt for new physics at the world’s largest particle collider

In 1977, Ray and Charles Eames released a remarkable film that, over the course of just nine minutes, spanned the limits of human knowledge. Powers of Ten begins with an overhead shot of a man on a picnic blanket inside a one-square-meter frame. The camera pans out: 10, then 100 meters, then a kilometer, and eventually all the way to the then-known edges of the observable universe, at 10²⁴ meters. There, at the farthest vantage, it reverses. The camera zooms back in, flying through galaxies to arrive at the picnic scene, where it plunges into the man’s skin, digging down through successively smaller scales: tissues, cells, DNA, molecules, atoms, and eventually atomic nuclei, at 10⁻¹⁴ meters. The narrator’s smooth voice-over ends the journey: “As a single proton fills our scene, we reach the edge of present understanding.”

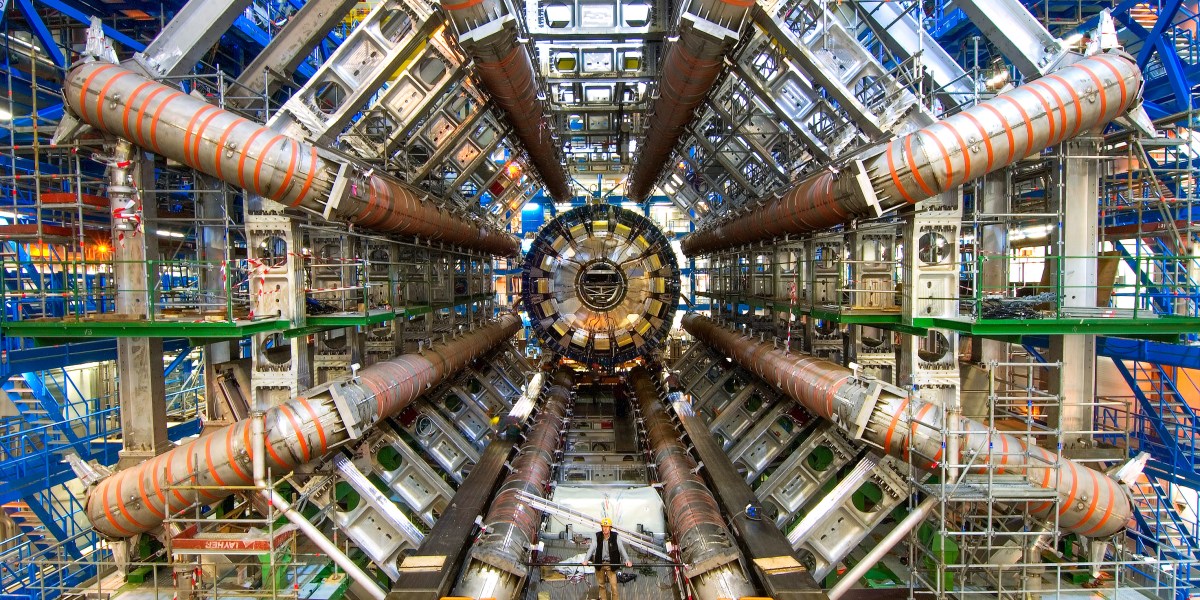

During the intervening half-century, particle physicists have been exploring the subatomic landscape where Powers of Ten left off. Today, much of this global effort centers on CERN’s Large Hadron Collider (LHC), an underground ring 17 miles (27 kilometers) around that straddles the border between Switzerland and France. There, powerful magnets guide hundreds of trillions of protons as they do laps at nearly the speed of light underneath the countryside. When a proton headed clockwise plows into a proton headed counterclockwise, the churn of matter into energy transmutes the protons into debris: electrons, photons, and more exotic subatomic bric-a-brac. The newly created particles explode radially outward, where they are picked up by detectors.

In 2012, using data from the LHC, researchers discovered a particle called the Higgs boson. In the process, they answered a nagging question: Where do fundamental particles, such as the ones that make up all the protons and neutrons in our bodies, get their mass? A half-century earlier, theorists had cautiously dreamed the Higgs boson up, along with an accompanying field that would invisibly suffuse space and provide mass to particles that interact with it. When the particle was finally found, scientists celebrated with champagne. A Nobel for two of the physicists who predicted the Higgs boson soon followed.

But now, more than a decade after the excitement of finding the Higgs, there is a sense of unease, because there are still unanswered questions about the fundamental constituents of the universe.

Perhaps the most persistent of these questions is the identity of dark matter, a mysterious substance that binds galaxies together and makes up about 27% of the cosmos’s total mass and energy. We know dark matter must exist because we have astronomical observations of its gravitational effects. But since the discovery of the Higgs, the LHC has seen no new particles, of dark matter or anything else, despite nearly doubling its collision energy and quintupling the amount of data it can collect. Some physicists have said that particle physics is in a “crisis,” but there is disagreement even on that characterization: another camp insists the field is fine, and still others say that there is indeed a crisis, but that the crisis is good. “I think the community of particle phenomenologists is in a deep crisis, and I think people are afraid to say those words,” says Yoni Kahn, a theorist at the University of Illinois Urbana-Champaign.

The anxieties of particle physicists may, at first blush, seem like inside baseball. In reality, they concern the universe, and how we can continue to study it—of interest if you care about that sort of thing. The past 50 years of research have given us a spectacularly granular view of nature’s laws, each successive particle discovery clarifying how things really work at the bottom. But now, in the post-Higgs era, particle physicists have reached an impasse in their quest to discover, produce, and study new particles at colliders. “We do not have a strong beacon telling us where to look for new physics,” Kahn says.

So, crisis or no crisis, researchers are trying something new. They are repurposing detectors to search for unusual-looking particles, squeezing what they can out of the data with machine learning, and planning for entirely new kinds of colliders. The hidden particles that physicists are looking for have proved more elusive than many expected, but the search is not over—nature has just forced them to get more creative.

An almost-complete theory

As the Eameses were finishing Powers of Ten in the late ’70s, particle physicists were bringing order to a “zoo” of particles that had been discovered in the preceding decades. Somewhat drily, they called this framework, which enumerated the kinds of particles and their dynamics, the Standard Model.

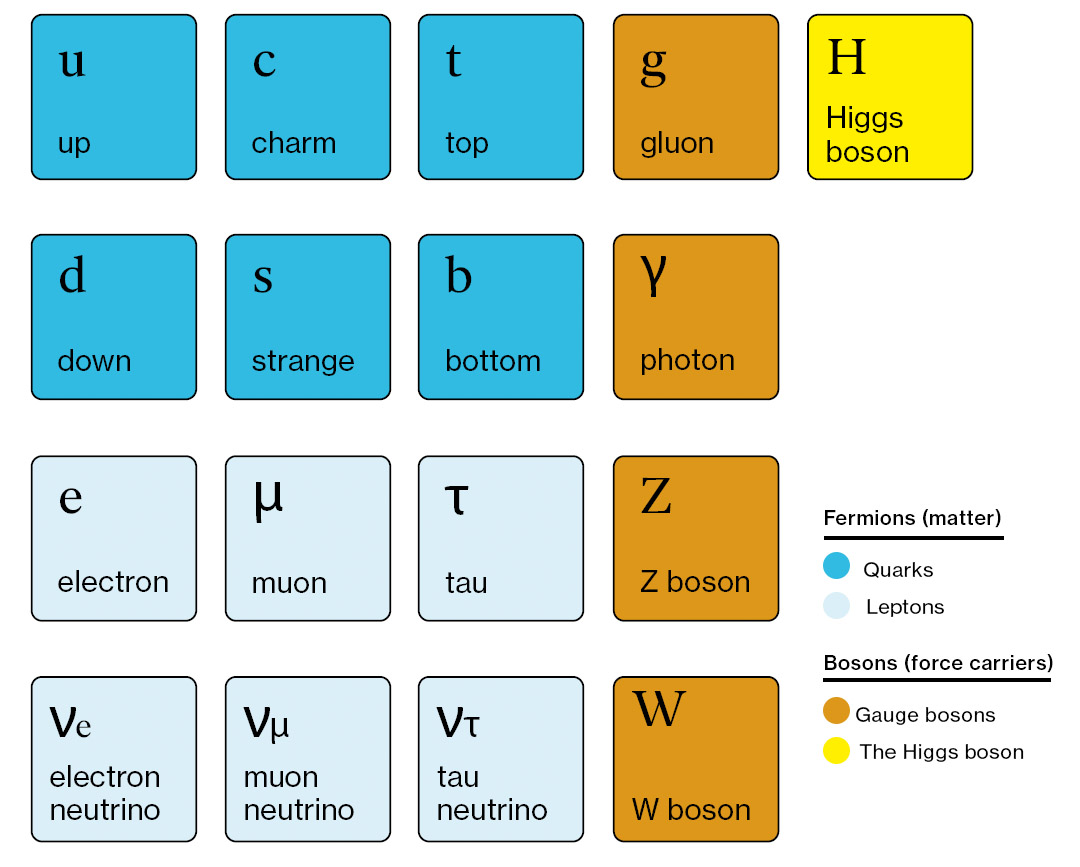

Roughly speaking, the Standard Model separates fundamental particles into two types: fermions and bosons. Fermions are the bricks of matter—two kinds of fermions called up and down quarks, for example, are bound together into protons and neutrons. If those protons and neutrons glom together and find an electron (or electrons) to orbit them, they become an atom. Bosons, on the other hand, are the mortar between the bricks. Bosons are responsible for all the fundamental forces besides gravity: electromagnetism; the weak force, which is involved in radioactive decay; and the strong force, which binds nuclei together. To transmit a force between one fermion and another, there must be a boson to act as a messenger. For example, quarks feel the attractive power of the strong force because they send and receive bosons called gluons.

The Standard Model

This framework unites three out of four fundamental forces and tames an unruly zoo into just 17 elementary particles.

Quarks are bound together by gluons. They form composite particles called hadrons, the most stable of which are protons and neutrons, the components of atomic nuclei.

Leptons can be charged or neutral. The charged leptons are the electron, muon, and tau. Each of these has a neutral neutrino counterpart.

Gauge bosons convey forces. Gluons carry the strong force; photons carry the electromagnetic force; and W and Z bosons carry the weak force, which is involved in radioactive processes.

The Higgs boson is the fundamental particle associated with the Higgs field, a field that permeates the entire universe and gives mass to other fundamental particles.

Nearly 50 years later, the Standard Model remains superbly successful; even under stress tests, it correctly predicts fundamental properties of the universe, like the magnetic properties of the electron and the mass of the Z boson, to extremely high accuracy. It can reach well past where Powers of Ten left off, to the scale of 10⁻²⁰ meters, roughly a 100,000th the size of a proton. “It’s remarkable that we have a correct model for how the world works down to distances of 10⁻²⁰ meters. It’s mind blowing,” says Seth Koren, a theorist at the University of Notre Dame, in Indiana.

Despite its accuracy, physicists have their pick of questions the Standard Model doesn’t answer—what dark matter actually is, why matter dominates over antimatter when they should have been made in equal amounts in the early universe, and how gravity fits into the picture.

Over the years, thousands of papers have suggested modifications to the Standard Model to address these open questions. Until recently, most of these papers relied on the concept of supersymmetry, abbreviated to the friendlier “SUSY.” Under SUSY, fermions and bosons are actually mirror images of one another, so that every fermion has a boson counterpart, and vice versa. The photon would have a superpartner dubbed a “photino” in SUSY parlance, while an electron would have a “selectron.” If these particles were high in mass, they would be “hidden,” unseen unless a sufficiently high-energy collision left them as debris. In other words, to create these heavy superpartners, physicists needed a powerful particle collider.

It might seem strange, and overly complicated, to double the number of particles in the universe without direct evidence. SUSY’s appeal was in its elegant promise to solve two tricky problems. First, superpartners would explain the Higgs boson’s oddly low mass. The Higgs is about 100 times more massive than a proton, but the math suggests it should be 100 quadrillion times more massive. (SUSY’s quick fix is this: every particle that interacts with the Higgs contributes to its mass, causing it to balloon. But each superpartner would counteract its ordinary counterpart’s contribution, getting the mass of the Higgs under control.) The second promise of SUSY: those hidden particles would be ideal candidates for dark matter.

SUSY was so nifty a fix to the Standard Model’s problems that plenty of physicists thought they would find superpartners before they found the Higgs boson when the LHC began taking data in 2010. Instead, there has been resounding silence. Not only has there been no evidence for SUSY, but many of the most promising scenarios where SUSY particles would solve the problem of the Higgs mass have been ruled out.

At the same time, many non-collider experiments designed to directly detect the kind of dark matter you’d see if it were made up of superpartners have come up empty. “The lack of evidence from both direct detection and the LHC is a really strong piece of information the field is still kind of digesting,” Kahn says.


Many younger researchers—like Sam Homiller, a theorist at Harvard University—are less attached to the idea. “[SUSY] would have been a really pretty story,” says Homiller. “Since I came in after it … it’s just kind of like this interesting history.”

Some theorists are now directing their search away from particle accelerators and toward other sources of hidden particles. Masha Baryakhtar, a theorist at the University of Washington, uses data from stars and black holes. “These objects are really high density, often high temperature. And so that means that they have a lot of energy to give up to create new particles,” Baryakhtar says. In their nuclear furnaces, stars might produce loads and loads of another dark matter candidate called the axion. There are experiments on Earth that aim to detect such particles as they reach us. But if a star is expending energy to create axions, there will also be telltale signs in astronomical observations. Baryakhtar hopes these celestial bodies will be a useful complement to detectors on Earth.

Other researchers are finding ways to give new life to old ideas like SUSY. “I think SUSY is wonderful—the only thing that’s not wonderful is that we haven’t found it,” quips Karri DiPetrillo, an experimentalist at the University of Chicago. She points out that SUSY is far from being ruled out. In fact, some promising versions of SUSY that account for dark matter (but not the Higgs mass) are completely untested.

After initial investigations did not find SUSY in the most obvious places, many researchers began looking for “long-lived particles” (LLPs), a generic class of potential particles that includes many possible superpartners. Because detectors are primarily designed to see particles that decay immediately, spotting LLPs challenges researchers to think creatively.

“You need to know the details of the experiment that you’re working on in a really intimate way,” DiPetrillo says. “That’s the dream—to really be using your experiment and pushing it to the max.”

The two general-purpose detectors at the LHC, ATLAS and CMS, are a bit like onions, with concentric layers of particle-tracking hardware. Most of the initial mess from proton collisions—jets and showers of quarks—decays immediately and gets absorbed by the inner layers of the onion. The outermost layer of the detector is designed to spot the clean, arcing paths of muons, which are heavier versions of electrons. If an LLP created in the collision made it to the muon tracker and then decayed, the particle trajectory would be bizarre, like a baseball hit from first base instead of home plate. A recent search by the CMS collaboration used this approach to search for LLPs but didn’t spot any evidence for them.

Researchers scouring the data often don’t have any faith that any particular search will turn up new physics, but they feel a responsibility to search all the same. “We should do everything in our power to make sure we leave no stone unturned,” DiPetrillo says. “The worst thing about the LHC would be if we were producing SUSY particles and we didn’t find them.”

Needles in high-energy haystacks

Searching for new particles isn’t just a matter of being creative with the hardware; it’s also a software problem. While it’s running, the LHC generates about a petabyte of collision data per second—a veritable firehose of information. Less than 1% of that gets saved, explains Ben Nachman, a data physicist at Lawrence Berkeley National Lab: “We just can’t write a petabyte per second to tape right now.”

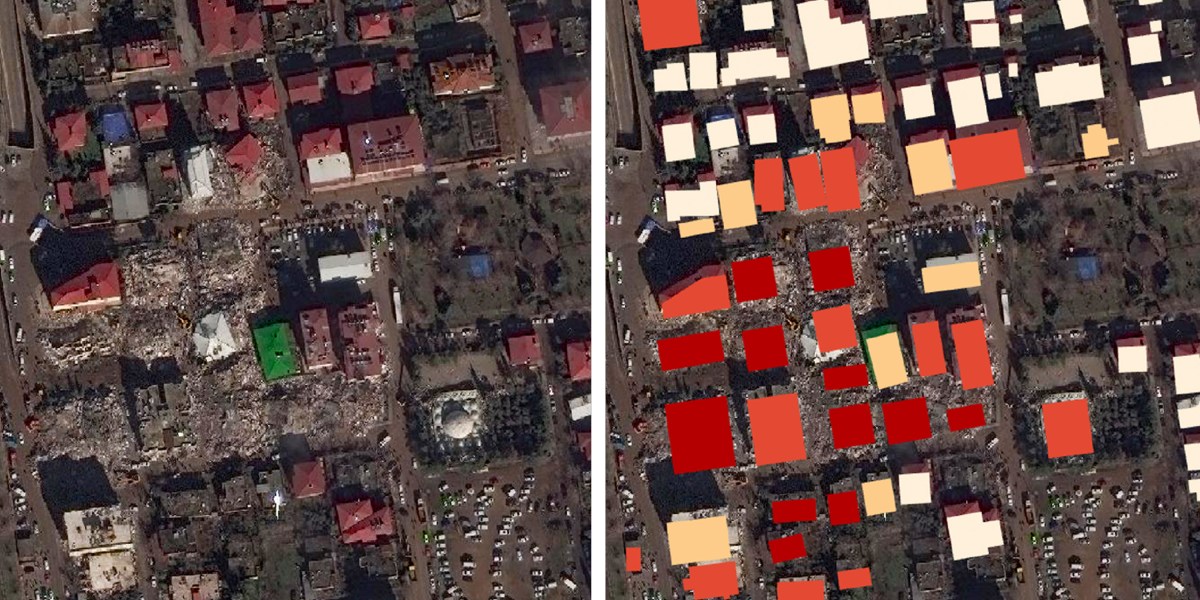

Dealing with that data will only become more important in the coming years as the LHC receives its “high luminosity” upgrade. Starting at the end of the decade, the HL-LHC will operate at the same energy, but it will record about 10 times more data than the LHC has accumulated so far. The boost will come from an increase in beam density: stuffing more protons into the same space leads to more collisions, which translates to more data. As the frame fills with dozens of collisions, the detector begins to look like a Jackson Pollock painting, with splashes of particles that are impossible to disentangle.

To handle the increasing data load and search for new physics, particle physicists are borrowing from other disciplines, like machine learning and math. “There’s a lot of room for creativity and exploration, and really just kind of thinking very broadly,” says Jessica Howard, a phenomenologist at the University of California, Santa Barbara.

One of Howard’s projects involves applying optimal transport theory, an area of mathematics concerned with moving stuff from one place to the next, to particle detection. (The field traces its roots to the 18th century, when the French mathematician Gaspard Monge was thinking about the optimal way to excavate earth and move it.) Conventionally, the “shape” of a particle collision—roughly, the angles at which the particles fly out—has been described by simple variables. But using tools from optimal transport theory, Howard hopes to help detectors be more sensitive to new kinds of particle decays that have unusual shapes, and better able to handle the HL-LHC’s higher rates of collisions.
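To make the idea of comparing collision “shapes” concrete, here is a minimal Python sketch of a one-dimensional earth mover’s distance between two sets of particle angles. It is an illustration only, not Howard’s method: the function name `earth_movers_distance_1d` and the toy angle lists are assumptions made up for this example, the sketch ignores the fact that angles wrap around, and real analyses typically weight particles by energy and work in more than one angular dimension.

```python
def earth_movers_distance_1d(angles_a, angles_b):
    """Average distance each unit of 'earth' moves between two equal-size samples.

    In one dimension with equal weights, the cheapest transport plan simply
    pairs the sorted values of one sample with the sorted values of the other.
    """
    if len(angles_a) != len(angles_b):
        raise ValueError("this sketch assumes equal-size samples")
    pairs = zip(sorted(angles_a), sorted(angles_b))
    return sum(abs(a - b) for a, b in pairs) / len(angles_a)


# Toy events: particle angles in radians (made-up numbers, not detector data).
two_jet_event = [0.0, 0.1, 0.2, 3.0, 3.1, 3.2]   # two tight sprays of particles
similar_event = [0.1, 0.2, 0.3, 3.0, 3.1, 3.2]   # nearly the same shape
spread_event = [0.0, 0.6, 1.2, 1.8, 2.4, 3.0]    # particles fanned out evenly

print(earth_movers_distance_1d(two_jet_event, similar_event))
print(earth_movers_distance_1d(two_jet_event, spread_event))
```

The first distance comes out small (about 0.05) and the second much larger (about 0.6): the more “earth” has to be moved to turn one event into another, the more different their shapes.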

As with many new approaches, there are doubts and kinks to work out. “It’s a really cute idea, but I have no idea what it’s useful for at the moment,” Nachman says of optimal transport theory. He is a proponent of novel machine-learning approaches, some of which he hopes will allow researchers to do entirely different kinds of searches and “look for patterns that we couldn’t have otherwise found.”

Though particle physicists were early adopters and have been using machine learning since the late 1990s, the past decade of advances in deep learning has dramatically changed the landscape.

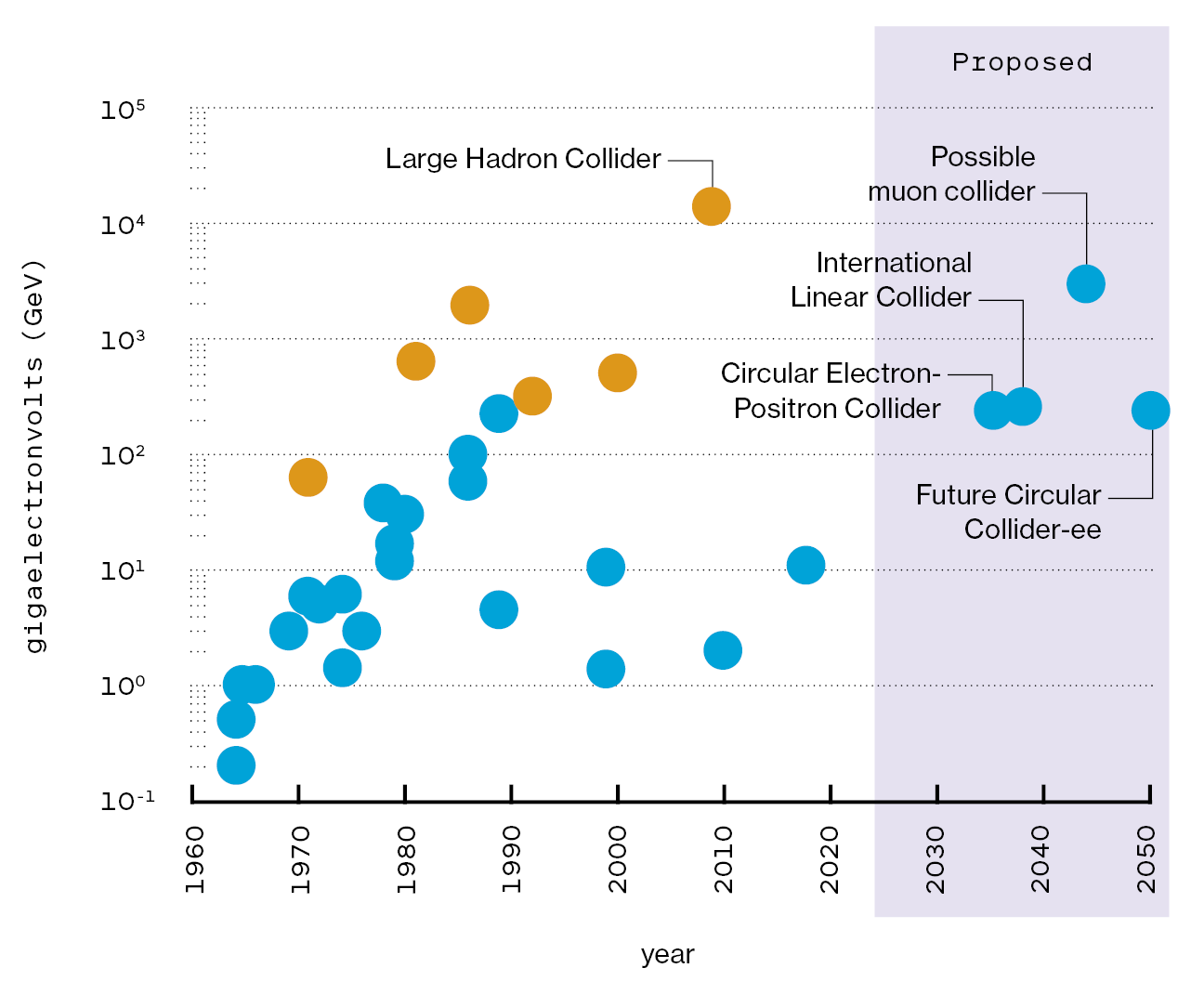

Packing more power

The energy of particle colliders (as measured by the combined energy of two colliding particles) has risen over the decades, opening up new realms of physics to explore.

Collisions between leptons, such as electrons and positrons, are efficient and precise, but limited in energy. Among potential future projects is the possibility of colliding muons, which would give a big jump in collision energy.

Collisions between hadrons, such as protons and antiprotons, have high energy but limited precision. Although it would start with electrons (rightmost point), a possible Future Circular Collider could reach 100,000 (10⁵) GeV by colliding protons.

“[Machine learning] can almost always improve things,” says Javier Duarte, an experimentalist at the University of California, San Diego. In a hunt for needles in haystacks, the ability to change the signal-to-noise ratio is crucial. Unless physicists can figure out better ways to search, more data might not help much—it might just be more hay.

One of the most notable but understated applications for this kind of work is refining the picture of the Higgs. About 60% of the time, the Higgs boson decays into a pair of bottom quarks. Bottom quarks are tricky to find amid the mess of debris in the detectors, so researchers had to study the Higgs through its decays into an easy-to-spot photon pair, even though that happens only about 0.2% of the time. But in the span of a few years, machine learning has dramatically improved the efficiency of bottom-quark tagging, which gives researchers another way to measure the Higgs boson. “Ten years ago, people thought this was impossible,” Duarte says.

The Higgs boson is of central importance to physicists because it can tell them about the Higgs field, the phenomenon that gives mass to all the other elementary particles. Even though some properties of the Higgs boson have been well studied, like its mass, others—like the recursive way it interacts with itself—remain unknown with any kind of precision. Measuring those properties could rule out (or confirm) theories about dark matter and more.

What’s truly exciting about machine learning is its potential for a completely different class of searches called anomaly detection. “The Higgs is kind of the last thing that was discovered where we really knew what we were looking for,” Duarte says. Researchers want to use machine learning to find things they don’t know to look for.

In anomaly detection, researchers don’t tell the algorithm what to look for. Instead, they give the algorithm data and tell it to describe the data in as few bits of information as possible. Currently, anomaly detection is still nascent and hasn’t resulted in any strong hints of new physics, but proponents are eager to try it out on data from the HL-LHC.

Because anomaly detection aims to find anything that is sufficiently out of place, physicists call this style of search “model agnostic”—it doesn’t depend on any real assumptions.
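One simple way to picture “describe the data in as few bits as possible” is compression followed by a check of what gets lost. The Python sketch below is a toy under stated assumptions, not what any LHC experiment runs: it swaps in principal component analysis (via NumPy) as a stand-in for the neural networks used in practice, the five “features” per event are invented, and the 99.9% cutoff is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Toy "ordinary" events with 5 features. By construction, features 1 and 2
# move together, features 3 and 4 move together, and feature 5 is just noise.
hidden = rng.normal(size=(2000, 2))
mixing = np.array([[1.0, 1.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0, 1.0, 0.0]])
ordinary = hidden @ mixing + 0.05 * rng.normal(size=(2000, 5))

# Learn a 2-number summary of each event from the data alone (no signal model).
mean = ordinary.mean(axis=0)
_, _, directions = np.linalg.svd(ordinary - mean, full_matrices=False)
basis = directions[:2]  # the two leading principal directions, shape (2, 5)


def reconstruction_error(events):
    """Squared error left after squeezing each event into 2 numbers and back."""
    centered = events - mean
    decoded = (centered @ basis.T) @ basis
    return ((centered - decoded) ** 2).sum(axis=1)


# Flag anything that compresses worse than 99.9% of ordinary events.
threshold = np.quantile(reconstruction_error(ordinary), 0.999)

# A made-up odd event whose features break the usual pairings.
odd_event = np.array([[3.0, -3.0, 3.0, -3.0, 3.0]])
print(reconstruction_error(odd_event)[0] > threshold)
```

The odd event is flagged because the learned summary cannot describe it, even though the code was never told what an anomaly looks like. The same openness is why the choice of threshold matters: set it too loosely and ordinary fluctuations get flagged too.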

Not everyone is fully on board. Some theorists worry that the approach will only yield more false alarms from the collider—more tentative blips in the data like “two-sigma bumps,” so named for their low level of statistical certainty. These are generally flukes that eventually disappear with more data and analysis. Koren is concerned that this will be even more the case with such an open-ended technique: “It seems they want to have a machine that finds more two-sigma bumps at the LHC.”
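The worry about two-sigma bumps comes down to arithmetic. The sketch below assumes Gaussian fluctuations and fully independent places to look, both simplifications, and the function names are invented for this example. It compares a two-sigma fluctuation with the five-sigma standard that particle physicists conventionally require before claiming a discovery.

```python
import math


def one_sided_p_value(n_sigma):
    """Chance that background alone fluctuates up by at least n_sigma (Gaussian tail)."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2))


def chance_of_any_bump(n_sigma, n_places):
    """Chance of at least one such fluctuation across n_places independent looks."""
    return 1 - (1 - one_sided_p_value(n_sigma)) ** n_places


print(f"2 sigma, one look:  {one_sided_p_value(2):.4f}")
print(f"5 sigma, one look:  {one_sided_p_value(5):.2e}")
print(f"2 sigma, 100 looks: {chance_of_any_bump(2, 100):.2f}")
```

Under these assumptions, a single two-sigma excess has roughly a 2% chance of being a fluke, but if you look in 100 independent places the odds of seeing at least one somewhere rise to about 90%. A search that looks everywhere at once will, by construction, turn up many of them.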

Nachman told me that he received a lot of pushback; he says one senior physicist told him, “If you don’t have a particular model in mind, you’re not doing physics.” Searches based on specific models, he says, have been amazingly productive—he points to the discovery of the Higgs boson as a prime example—but they don’t have to be the end of the story. “Let the data speak for themselves,” he says.

Building bigger machines

One thing particle physicists would really like in the future is more precision. The problem with protons is that each one is actually a bundle of quarks. Smashing them together is like a subatomic food fight. Ramming indivisible particles like electrons (and their antiparticles, positrons) into one another results in much cleaner collisions, like the ones that take place on a pool table. Without the mess, researchers can make far more precise measurements of particles like the Higgs.

An electron-positron collider would produce so many Higgs bosons so cleanly that it’s often referred to as a “Higgs factory.” But there are currently no electron-positron colliders that have anywhere near the energies needed to probe the Higgs. One possibility on the horizon is the Future Circular Collider (FCC). It would require digging an underground ring with a circumference of 55 miles (90 kilometers)—three times the size of the LHC—in Switzerland. That work would likely cost tens of billions of dollars, and the collider would not turn on until nearly 2050. There are two other proposals for nearer-term electron-positron colliders in China and Japan, but geopolitics and budgetary issues, respectively, make them less appealing prospects.

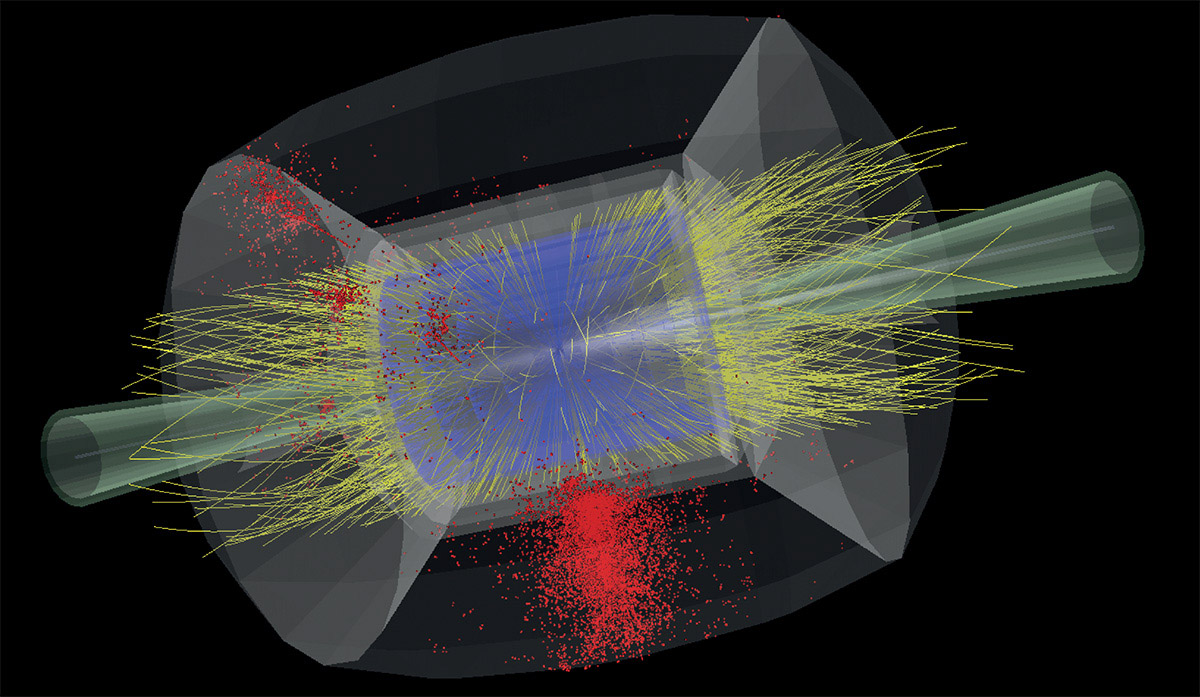

…a muon collider. The simulation suggests it’s possible to reconstruct information about the Higgs boson from the bottom quarks (red dots) it decays into, despite the noisy environment.

Physicists would also like to go to higher energies. “The strategy has literally never failed us,” Homiller says. “Every time we’ve gone to higher energy, we’ve discovered some new layer of nature.” It will be nearly impossible to do so with electrons; because they have such a low mass, they radiate away about a trillion times more energy than protons every time they loop around a collider. But under CERN’s plan, the FCC tunnel could be repurposed to collide protons at energies eight times what’s possible in the LHC—about 50 years from now. “It’s completely scientifically sound and great,” Homiller says. “I think that CERN should do it.”

Could we get to higher energies faster? In December 2023, the alliteratively named Particle Physics Project Prioritization Panel (P5) put forward a vision for the near future of the field. In addition to addressing urgent priorities like continued funding for the HL-LHC upgrade and plans for telescopes to study the cosmos, P5 also recommended pursuing a “muon shot,” an ambitious plan to develop technology to collide muons.

The idea of a muon collider has tantalized physicists because of its potential to combine high energies with clean collisions, since the particles are indivisible. It seemed well out of reach until recently; muons decay in just 2.2 microseconds, which makes them extremely hard to work with. Over the past decade, however, researchers have made strides, showing that, among other things, it should be possible to manage the roiling cloud of energy caused by decaying muons as they’re accelerated around the machine. Advocates of a muon collider also tout its smaller size (about 10 miles around), its faster timeline (optimistically, as early as 2045), and the possibility of a US site (specifically, Fermi National Accelerator Laboratory, about 50 miles west of Chicago).

There are plenty of caveats: a muon collider still faces serious technical, financial, and political hurdles—and even if it is built, there is no guarantee it will discover hidden particles. But especially for younger physicists, the panel’s endorsement of muon collider R&D is more than just a policy recommendation; it is a bet on their future. “This is exactly what we were hoping for,” Homiller says. “This opens a pathway to having this exciting, totally different frontier of particle physics in the US.” It’s a frontier he and others are keen to explore.

Dan Garisto is a freelance physics journalist based in New York City.