Facebook rolls out new tools for Group admins to manage their communities and reduce misinformation – TechCrunch

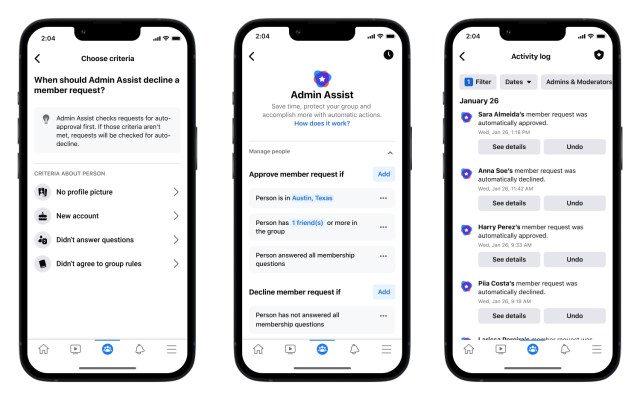

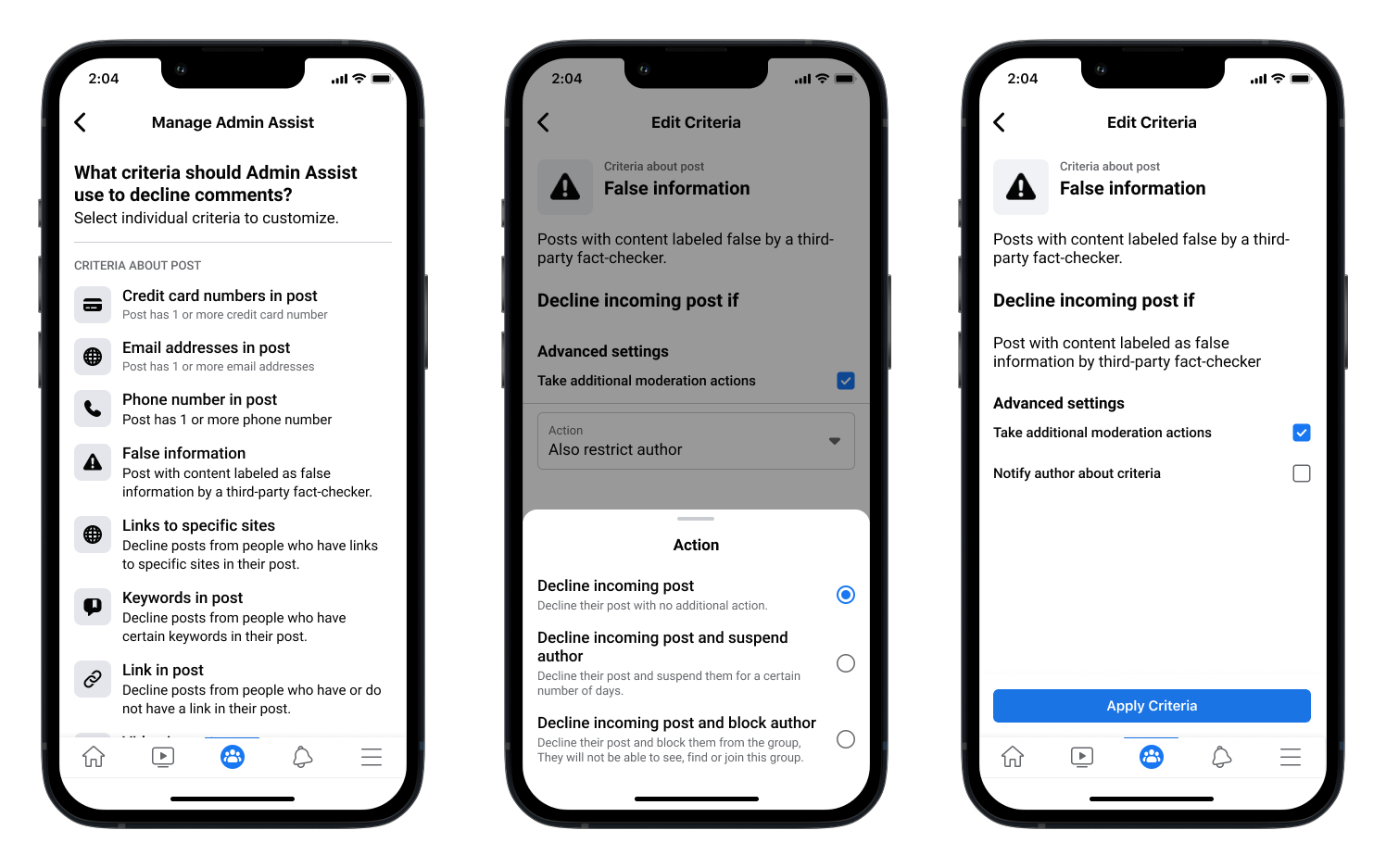

Facebook announced today that it’s rolling out new features to help Facebook Group administrators keep their communities safe, manage interactions and reduce misinformation. Most notably, the company has added the option for admins to automatically decline incoming posts that third-party fact-checkers have identified as containing false information. Facebook says this new tool will help admins prevent the spread of misinformation in their groups.

The company is also expanding its “mute” function and renaming it “suspend,” so admins can temporarily suspend participants from posting, commenting, reacting, participating in group chats and more. The new feature is designed to make it easier for admins to manage interactions in their groups and limit bad actors.

Image Credits: Meta

In addition, admins can now automatically approve or decline member requests based on criteria they set up, such as whether the prospective member has answered the group’s membership questions. The group’s “Admin Home” page is being updated, too, with a new overview section on desktop that makes it easier for admins to quickly review items that need their attention. On mobile, there’s a new insights summary to help admins understand the growth and engagement of their groups.

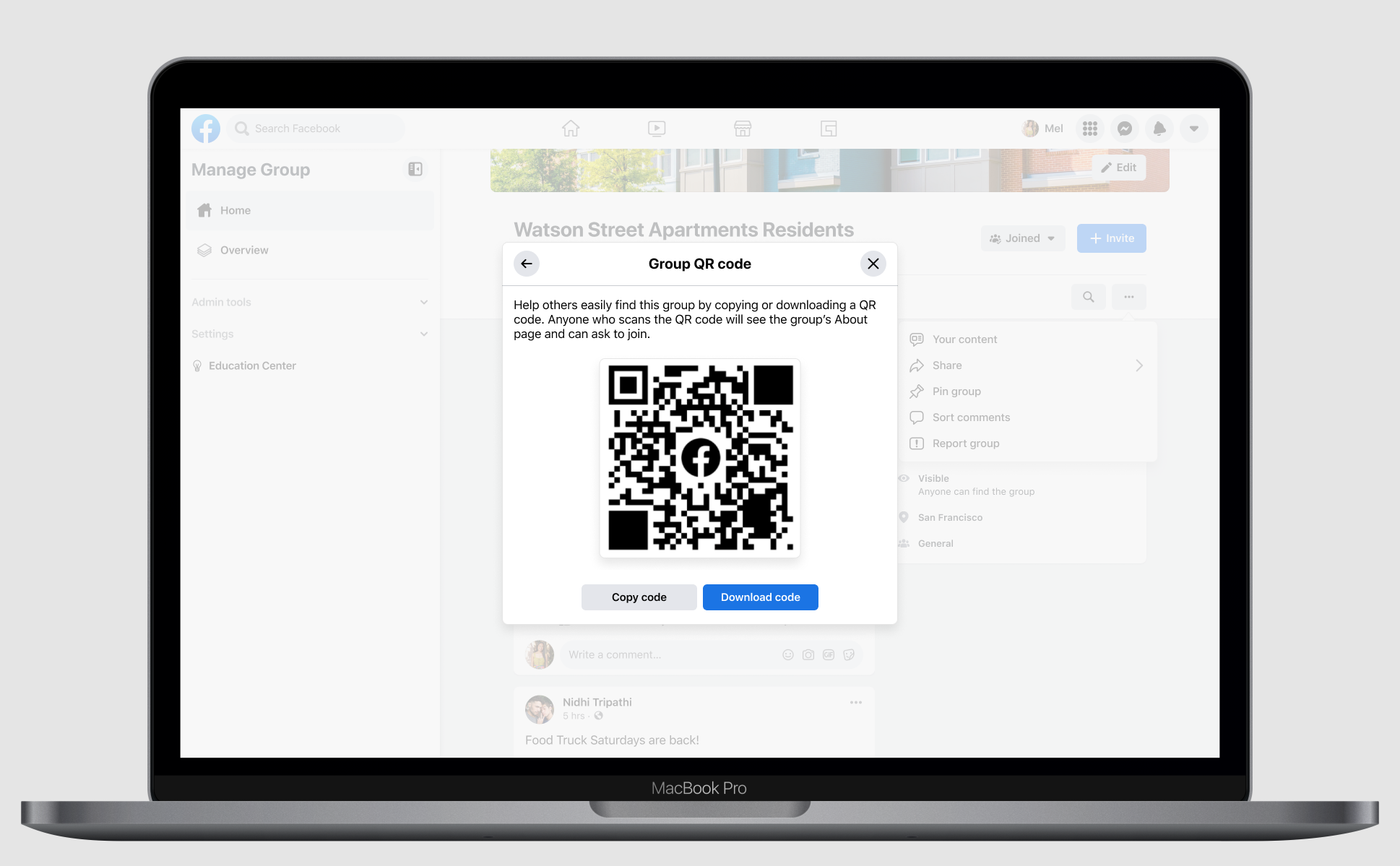

Facebook is also introducing new tools to help admins who want to grow their groups and find relevant people to join their communities.

Admins can now invite people to join their group via email. Facebook has also added QR codes that admins can download and share however they like, including offline. When someone scans the QR code, they’ll be directed to the group’s “About” page, where they can join or request to join.

The new changes have rolled out to all users globally.

Image Credits: Meta

Today’s announcement comes as Facebook Groups have made headlines over the past few years for their growing use by those looking to spread harmful content and misinformation. Because they are often private, Facebook Groups have become breeding grounds for a wide range of dangerous content, including health misinformation, anti-science movements and conspiracy theories. The new features announced today aim to address some of these issues and give admins more control over their communities, but they’re arriving years late to the battle against online misinformation.

This isn’t the first time that Facebook has given admins more control over their groups.

Last June, the company introduced a new set of tools aimed at helping Facebook Group administrators get a better handle on their online communities. Among the more interesting tools was a machine-learning-powered feature that alerts admins to potentially unhealthy conversations taking place in their group. Another feature gave admins the ability to slow down the pace of a heated conversation by limiting how often group members can post. At the time, Facebook said there were “tens of millions” of groups managed by more than 70 million active admins and moderators worldwide.

Along with working to ensure that admins have the tools they need to manage their groups, Facebook is also focused on enhancing its Groups product overall. At its Facebook Communities Summit in November, the social networking giant announced a series of updates for Facebook Groups, including tools designed to help admins shape their group’s culture, as well as new additions such as subgroups and paid subscription subgroups, real-time chat for moderators, support for community fundraisers and more. The company said these changes were made in anticipation of the role Groups will play in parent company Meta’s plans for the “metaverse” it’s building.